You want to dig deeper and understand some of the intricacies and concepts behind popular machine learning models. However, you’re nothing if not thorough. You’re no stranger to building awesome random forests and other tree based ensemble models that get the job done. You spend more time on Kaggle than Facebook now. You know your way around Scikit-Learn like the back of your hand.

You’ve come a long way from writing your first line of Python or R code. How much information does the event ‘not surrender’ have?Īs you see, the unlikely event has a higher entropy.The simple logic and math behind a very effective machine learning algorithm How much information does the event ‘surrender’ have? Let’s say there is a 75% chance that Nazis will surrender and a 25% chance that they won’t. Thus, we know what happened to total 4 events. When one event happens, it says the other three events didn’t happen. For example, let’s say there are 4 events and they are all equally likely to happen (p = 1/4). When your events are all equally likely to happen and you know that one event just happened, you can eliminate the possibility of every other event (total 1/p events) happening.

Then, if your telegrapher tells you that they did surrender, you can eliminate the uncertainty of total 2 events (both surrender and not surrender), which is the reciprocal of p (=1/2). Lets say there is 50 50 chance that the Nazis would surrender (p = 1/2). Then the question is… Why is 1/p(x) the amount of information? This means 1/p(x) should be the information of each case (winning the war, losing the war, etc). H(X) is the total amount of information in an entire probability distribution. Wait… Why do we take the reciprocal of probability? In real life, news can be millions of different facts. It doesn’t have to be 4 states, 256 states, etc. You can think of variable as news from the telegrapher. In the same way, he would need only 8 bits even if there are 256 different scenarios.Ī little more formally, the entropy of a variable is the “amount of information” contained in the variable. Now your telegrapher would need 2 bits (00,01,10,11) to encode this message. Let’s say there are four possible war outcomes tomorrow instead of two. So, we’re using more than 100 bits to send a message that could be reduced to just one bit. “The war is not over” (8 bits * 19 characters = 152 bits) “The war is over” (instead of 1 bit, we use 8 bits * 15 characters = 120 bits) In 2018, you can send the exact same information on a smartphone typing Your telegrapher told you that if Nazis surrender, he will send you a ‘1’ and if they don’t, he will send you a ‘0’. Let’s say you are a commander in World War II in 1945. Therefore, with 1 bit, we can represent 2 different facts (aka information), either one or zero (or True or False). To understand entropy, we need to start thinking in terms of the “bits”.

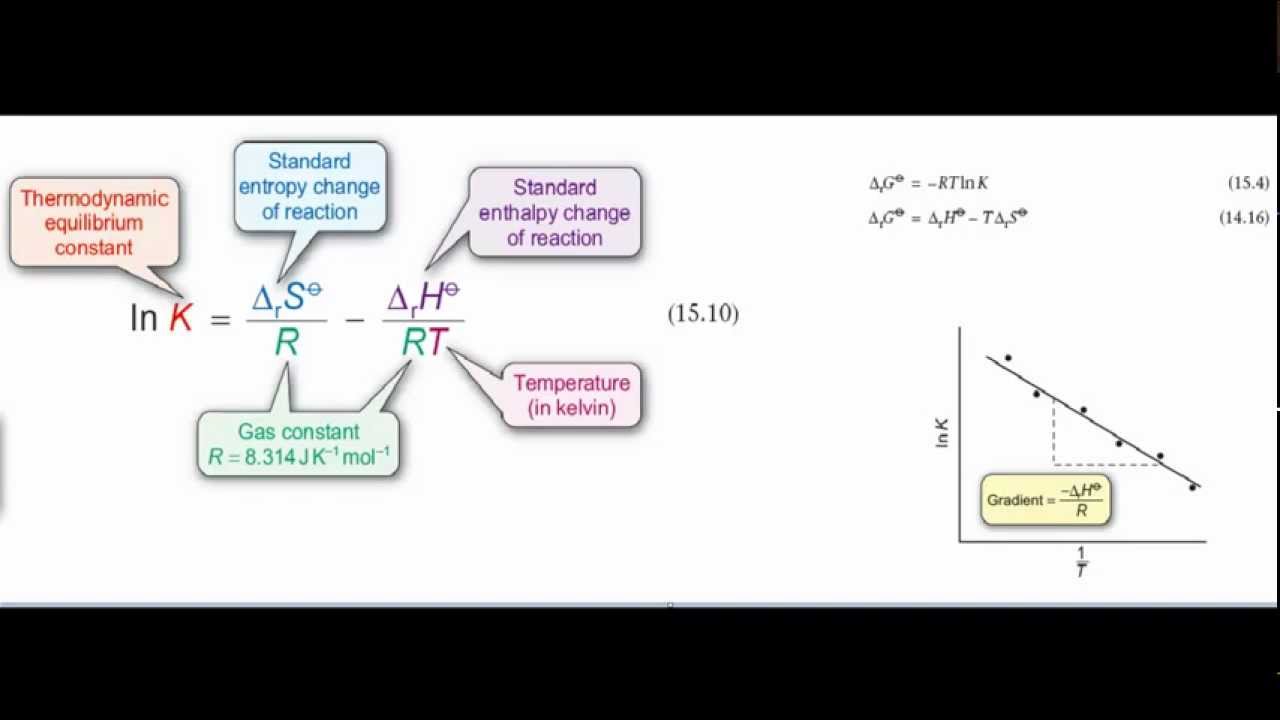

Shannon’s Entropy leads to a function which is the bread and butter of an ML practitioner - the cross entropy that is heavily used as a loss function in classification and also the KL divergence which is widely used in variational inference. The definition of Entropy for a probability distribution (from The Deep Learning Book) But what does this formula mean?įor anyone who wants to be fluent in Machine Learning, understanding Shannon’s entropy is crucial.
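If you want to check the telegraph numbers yourself, here is a minimal Python sketch. The function names (`bits_needed`, `self_information`, `entropy`) are made up for illustration and do not come from any library. It assumes information is measured in bits (log base 2) and that entropy is the probability-weighted average of the per-event information, which is the standard definition.

```python
import math


def bits_needed(num_outcomes: int) -> int:
    """Smallest number of bits that can label `num_outcomes` distinct messages."""
    if num_outcomes < 1:
        raise ValueError("need at least one outcome")
    # (n - 1).bit_length() equals ceil(log2(n)) for n >= 1, using integers only.
    return (num_outcomes - 1).bit_length()


def self_information(p: float) -> float:
    """Information, in bits, of a single event with probability p: log2(1/p)."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return math.log2(1.0 / p)


def entropy(probs: list[float]) -> float:
    """Average information over a whole distribution (assumed definition)."""
    # Events with probability 0 contribute nothing to the sum.
    return sum(p * self_information(p) for p in probs if p > 0.0)


if __name__ == "__main__":
    # Plain-text messages versus the one-bit telegraph code.
    for message in ("The war is over", "The war is not over"):
        print(message, "->", 8 * len(message), "bits as 8-bit characters")

    # Equally likely outcomes: 2, 4 and 256 scenarios.
    for n in (2, 4, 256):
        print(n, "outcomes ->", bits_needed(n), "bits")

    # The 75% / 25% surrender example.
    print("surrender:", self_information(0.75), "bits")
    print("not surrender:", self_information(0.25), "bits")
    print("entropy of the news:", entropy([0.75, 0.25]), "bits")
```

Notice that the 50/50 case gives exactly 1 bit of entropy, matching the single ‘1’ or ‘0’ from the telegrapher, while the 75/25 case gives less than 1 bit on average because the news is more predictable.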
